The map is not the territory. That’s become a cliche. Yet the map is not entirely separate from the territory. Even the most distorted map came from somewhere.

There is a famous theorem of algebraic topology called the Brouwer Fixed Point Theorem, which says that any continuous function f from the closed unit ball into itself has at least one point x for which f(x)=x. That is, one point stays the same. That means that if you have two copies of a painting and crumple one up and place it atop the other, within its borders, at least one pixel will be directly on top of its twin.
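For readers who like to see the theorem at work, here is a minimal sketch of its one-dimensional case, where the "ball" is just the interval [0, 1]. The function `crumple` is a made-up stand-in for any continuous map of the interval into itself, and `find_fixed_point` is a hypothetical helper that uses plain bisection. It illustrates the idea only; it is not a proof and not a method for higher dimensions.

```python
import math

def find_fixed_point(f, lo=0.0, hi=1.0, tol=1e-12):
    """Bisection on g(x) = f(x) - x for a continuous f mapping [lo, hi] into itself."""
    # Because f's values stay inside [lo, hi], g(lo) >= 0 and g(hi) <= 0,
    # so g must cross zero somewhere in between.
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) - mid >= 0:
            lo = mid   # the crossing lies at or to the right of mid
        else:
            hi = mid   # the crossing lies to the left of mid
    return (lo + hi) / 2

def crumple(x):
    # Hypothetical "crumpling": continuous, with values between 0.1 and 0.9
    return 0.5 + 0.4 * math.sin(7 * x)

x_star = find_fixed_point(crumple)
print(x_star, crumple(x_star))  # the two numbers agree to many decimal places
```

However wildly `crumple` wiggles, as long as it is continuous and keeps its values inside the interval, the search cannot come up empty.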

The conceptual maps and stories we make of the world start outside of concepts: in the senses and embodied experiences. The child’s emerging ideas meet frequent reality checks. As we get older, modern human beings operate more and more in a world of abstractions, symbols, concepts, and representations. Modern society, immersed more and more in a digital realm that is nothing but symbol, is especially prone to drift off into deranged illusions, entire narratives and worldviews barely connected — perhaps at a single Brouwerian point — to anything real.

The internal logic of a map or belief system is no guarantee of its sanity, as an encounter with your neighborhood Flat Earth theorist or QAnon believer will confirm. Their worldviews are maddeningly self-consistent.

It is not just those I could conveniently slander as cranks and conspiracy theorists who wander in a distorted map. Society’s standard belief systems have detached just as far from reality.

Recent insights into generative AI based on large language models (LLMs) illuminate what has happened to society more generally over the course of the last hundred years.

The ongoing revolution in artificial intelligence, rather than enabling us finally to corral the deluge of digital data into more sensible structures, threatens to create a whole new level of nonsense. A very recent paper by researchers at Stanford and Rice universities makes the case that the more that generative AIs train on synthetic data produced by generative AIs, the more they lose quality, diversity, or both in their output. In other words, the images they generate diverge more and more from real-looking images, and their variety collapses toward greater and greater sameness. The researchers call this process an “autophagous loop,” in which the AI consumes itself and goes mad:

> In the language of mathematics, at one extreme, an autophagous loop is a contraction mapping that collapses to a single, boring, point, while at the other extreme it is an unstable positive feedback loop that diverges into bedlam.

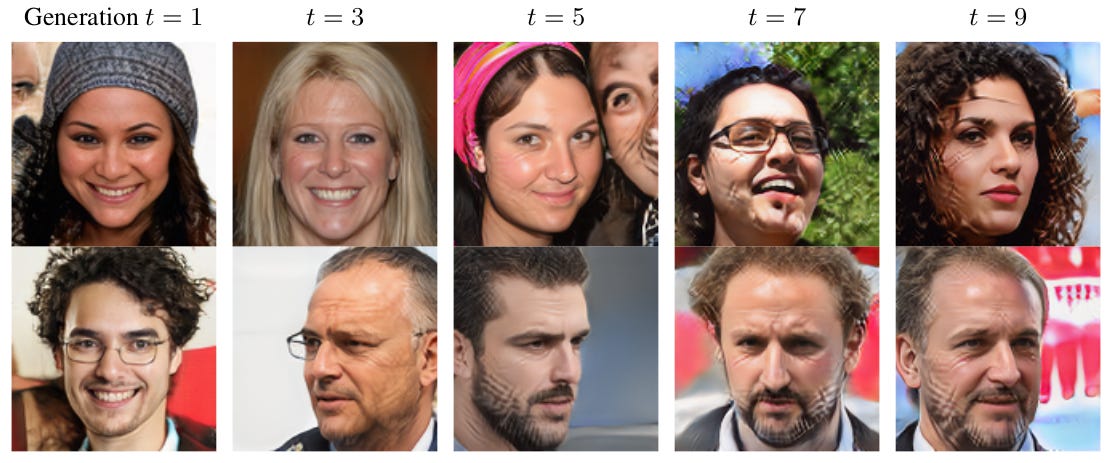

Homogeneity or bedlam. These are the endpoints of a complex system cut off from inputs of novelty. The paper displays examples of images generated by iterative models that bring their own synthetic images into the training set. Artifacts are amplified, diversity decreases, and strange distortions develop that become normative as they too join the training set. You’d think that the model would get better with each iteration, but it is the opposite. It gets worse at generating faces. Notice the cross-hatching on the faces in the later generations.

The researchers argue that this could easily start happening outside their experiments. After all, the internet, from which LLMs draw their training data, is increasingly populated by synthetic text and images. The day will soon come when there is more, possibly much more, synthetic data on the internet than real (human-generated) data. More fake images than real images. More LLM-generated text than text authored by human beings.

The perverse result could be that the AI technology designed to mimic human creations (speech, images, behavior) and generate human faces gets worse and worse at it the more it is deployed. The only way to prevent this from happening, according to the researchers, is to have sufficient quantities of fresh, real-world data constantly entering the training set.
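A toy version of the loop can be sketched in a few lines. To be clear about the assumptions: this is not the researchers' experiment. Their models generate images; the "model" here is just a bell curve refitted each generation to a training set, and the function name `run_loop`, the sample sizes, and the 50% fresh-data share are all invented for illustration. The "real world" is taken to be a standard normal distribution.

```python
import math
import random

def run_loop(generations, n_samples, fresh_fraction, seed=0):
    """Refit a Gaussian each generation to its own samples plus optional fresh data."""
    rng = random.Random(seed)
    mean, std = 0.0, 1.0  # generation 0 matches the "real" distribution N(0, 1)
    n_fresh = int(n_samples * fresh_fraction)
    for _ in range(generations):
        # Synthetic data: samples drawn from the previous generation's model
        synthetic = [rng.gauss(mean, std) for _ in range(n_samples - n_fresh)]
        # Fresh data: new samples drawn from the real distribution
        fresh = [rng.gauss(0.0, 1.0) for _ in range(n_fresh)]
        training_set = synthetic + fresh
        # "Training" is simply re-estimating the mean and the spread
        mean = sum(training_set) / n_samples
        variance = sum((x - mean) ** 2 for x in training_set) / (n_samples - 1)
        std = math.sqrt(variance)
    return std

collapsed_std = run_loop(1000, 20, fresh_fraction=0.0)  # feeds only on itself
refreshed_std = run_loop(1000, 20, fresh_fraction=0.5)  # half fresh data each time
print(collapsed_std, refreshed_std)
```

In the purely self-fed run, the spread of the bell curve drifts toward zero, the toy counterpart of collapsing diversity. In the run that keeps admitting real data, the spread stays in the neighborhood of the real one.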

People already notice a kind of triteness in synthetic art. It seems derivative. AI-generated poetry can be amusing, even clever, but no one would describe it as inspired. ChatGPT-generated articles have a telltale jejune quality we quickly learn to recognize.

All of this would be more disturbing were it not for the fact that human-generated art and writing have also trended in the same direction. The descent into “homogeneity, bedlam, or both” was underway long before AI existed. AI further extends, entrenches, and normalizes the already degenerating cultural output it uses as its learning model. Society as a whole has followed a similar path to autophagous AI, endlessly devouring and regurgitating the same tired elements of its cultural legacy in ever more convoluted labyrinths of self-reference. It is as if the training dataset of the cultural imaginary is suffocating from lack of fresh input. People create internet memes out of other memes about representations of representations of representations of something that once was real. We make movies about toys based on movies based on comic books that drew originally from an authentic creativity arising outside the symbolic realm. Having lost much of our access to that, we cannibalize our cultural inheritance instead. The digital map of reality is so crumpled and torn that it is hard to find the Brouwerian fixed point that tethers it to anything real.¹ Not even Guy Debord could have imagined that society could become so completely lost in its spectacle.

Artificial intelligence is merely the culmination of a long journey from reality into representation, from sense into symbol. It accelerates a process long underway: the involution of modern culture as it loses touch with its source and becomes about itself, degenerating into homogeneity and chaos.

As above, so below. I’ve noticed that the same thing happens in my own mind. I can get lost in a maze of concepts about concepts that careers off into fully self-consistent but objectively crazy delusions. The only thing that can reverse that process is an abundant influx of new information from outside my inner LLM training set. That is to say, I have to reconnect to the proto-conceptual sea of information of sense, spirit, body, and breath. Otherwise, I soon go as crazy as our society is, and the world I see through my cognitive filters degenerates into delirious chaos or vulgar sameness. Either nothing makes sense at all, or every idea seems trite, tired, and derivative. It is a spiritual suffocation that traps me in a hell of finitude. (Those who aspire to upload their consciousness onto a neural net have a nasty surprise awaiting them if, God forbid, they succeed. To be is not to compute. Reality is not reducible to data. Creation is not finite. But they will have locked themselves into a finite prison from which they will be unable to escape. They will agonize for the lost dimensions of the infinity of relational beingness.)

To some degree, most denizens of modern society feel something of this yearning to break out of the homogeneity and/or nonsense of a belief system that is suffocating for lack of sufficient ventilation to embodied experience.

Is the present essay an example of what I’m speaking of? To paraphrase Debord, any critique of the Spectacle becomes part of the Spectacle. Or as Philip K. Dick said, “To fight the Empire is to be infected with its derangement.” I hope the reader will affirm that this essay is not merely a reshuffling of the elements that an LLM could perform. I could not have written it if I hadn’t received input from the ocean today. Like any true artist, I am a vessel for the ongoing process of creation. Something new comes in, not from me, but through me. If I only sit in my office and think, drawing from the legacy real data and the synthetic data derived from it without adding new data from outside it, all I can do is reshuffle the bits.

That is what large language models do. They reshuffle the bits. In so doing, they accomplish much. They are a lot better at shuffling bits than human beings are. If the human being were indeed the brain, and if the brain were indeed a wetware bit-shuffling device, then indeed there would be nothing a human could say or think that a computer could not.

Today we are on a steep ascent curve in the development of artificial intelligence. Giddy with success, we cannot easily see AI’s limitations. Its dangers are obvious, but its limitations are only just starting to come into view. The soullessness of synthetic art intimates an aspect of that limitation. What imparts soul to art or inspiration to poetry? These are dynamic, relational properties. The Mona Lisa was a magnificently ensouled painting. Its endless reproduction is not. The second- and third-order imitations of art composed by AI do not merely copy human-authored images; they copy and recombine stylistic elements, principles, and themes. Humans do that too of course, but we are not limited to that. A true creator adds something new to what has come before. Culture does not just reshuffle itself. It incorporates novelty from beyond itself. Cultures that shut out that novelty wither and degenerate in the same way that the researchers’ images did — into homogeneity or bedlam.

Information technology has enabled us to become the masters of quantity. Quantitative data makes a map of the world, representing people and things, words and movements, faces and voices, locations and moments with numbers. As our ability to extract quantities and label objects has grown, so has the conviction that the world is quantity. Accordingly, we exile the qualitative, the analog, and the non-representational from consideration, and in so doing choke off the source of new information that keeps mind and culture vital.

We humans will not and should not cease our map-making. We will not and should not abandon the tools of representation and quantity. But we must recognize their limits. Information is more than data, and intelligence is more than computation. We, the wielders of AI tools, must stay outside the autophagous loop; we must learn from beyond the tools’ output. We must not rely on AI computations to make sense of things. We must open further — as individuals and in social and political processes — to information that cannot be packaged as numbers and labels and pixels and bits.

Does such information even exist? A pillar of scientific metaphysical ideology is that everything is measurable. From within the digital matrix, it seems that everything is. When I feel a breeze on my face and soil beneath my feet, when I watch a hummingbird hover and dart in the hydrangeas, when I wriggle in the ocean water or gasp in pain at a bee’s sting, I know otherwise. The way to keep the digital world sane is to draw from outside of it.

¹ For the mathematical sticklers out there: if the map is torn, the Brouwer Fixed Point Theorem no longer holds, because a tear breaks the requirement that the function be continuous. I thought about extending the metaphor to include this idea, but it was too much of a stretch. (Stretching is allowed though, ha ha. As long as the domain is homeomorphic to the closed unit ball, the theorem holds.)

Nice article, Charles. 'Nice' in a non-AI generated way. Maybe. Can you tell? Or not? Does it matter?

As I reflected on this I was reminded of the arguments John Ralston Saul made about 'reason' creating similar distortions, for the same basic reasons you describe here: reason quickly and easily closes itself into loops outside of the tangible somatic world. For example, it is easy to see that woke ideology is itself a comparable form of nth generational closed loop 'thinking'. I saw that woke delusion clearly with MBA / accountancy modeling being enacted in the large corporation I worked at in early 2000. And I saw it in the bankruptcy of firms that had thrived for 100 plus years that died within a few years of MBA-itis policies. (Now it is the 'reasonable' diversity and green wackiness that is being mandated outside of grounded feedback.) [See *Voltaire's Bastards: The Dictatorship of Reason in the West* by John Ralston Saul. A prescient and excellent light brought to the making of delusion as reasonable. https://www.goodreads.com/book/show/6584.Voltaire_s_Bastards. Also great is *The Unconscious Civilization* https://www.goodreads.com/book/show/421422.The_Unconscious_Civilization.]

Because the so-called 'science of business' had detached itself from reality feedback processes, there rose to a godhead the expert who floated in the clouds looking at their minions "from the 30,000 ft level", as was actually said to us once, as they proceeded to destroy the department and eventually the business.

And woke gender craziness is the same: because there is no such thing as biological gender, we will joyfully destroy your hormones and cut your body apart in order to reassemble you into the 'proper' gender in order to ease your gender confusion!!!! That is absolutely equivalent to the nth regenerative AI distortion (delusion) you discussed.

Thank you.

"Where is the wisdom we have lost in knowledge? Where is the knowledge we have lost in information?" T.S. Eliot, 1934.